Image credit: DALL-E 3 by OpenAI

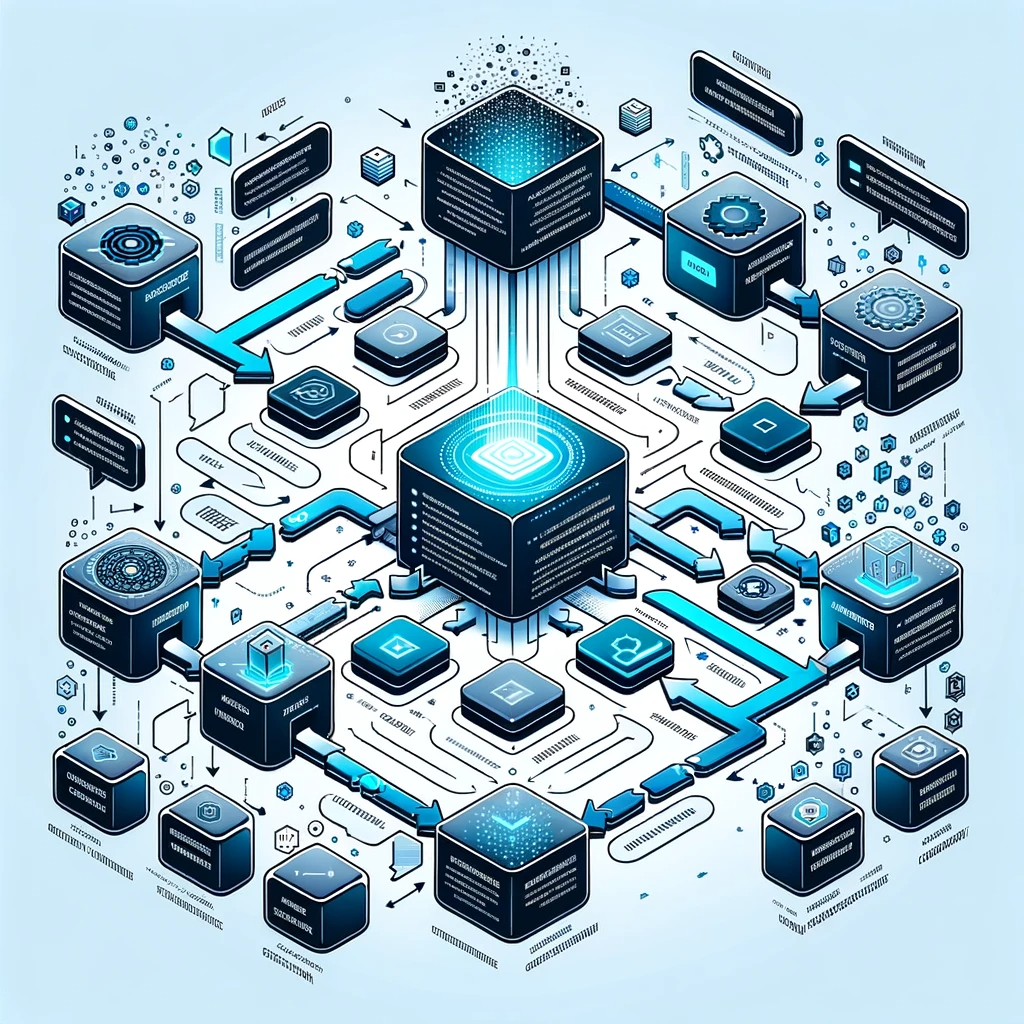

Prompt: "high-tech graphic showing multi-stage text processing"

With all the focus on chatbots, it can be easy to forget how relevant large language models are for general-purpose NLP tasks. Natural Language Processing is a broad field with an extensive history of specialized tool development for specific tasks. By connecting different models together with various pre- and post-processors, it is possible to hardwire an effective, multi-stage pipeline. But if something changes in your input data, your entire downstream processing might be affected.

With the advent of LLMs, we got a new way of constructing processing pipelines. Rather than set up discrete processing stages, we could simply pack a whole series of instructions into a single prompt. Social media quickly started filling up with examples of amazing super-prompts. So what's the catch?

Reliability!

While LLMs tolerate far more input variance than traditional statistical methods, this comes with a downside. Rather than following instructions deterministically, doing the same thing each time, LLMs have a built-in tendency to "get creative". This creativity is essential for producing text that mimics the way we humans naturally express ourselves. Within the context of a super-prompt, however, it often results in the model getting sidetracked and producing output that doesn't meet the final requirements.

In the case of our helpful Altinn Assistant, this would result in the following experience:

"I'm sorry, but the information provided does not contain any details about how to ..."

Divide and conquer

Our new approach is to subdivide the primary objective into multiple intermediate objectives, giving us more control and flexibility to achieve each one, lowering the risk of pipeline failure.

Proposed multi-stage pipeline:

- Analyse the user's query to extract the most relevant search terms. This is a perfect task for an LLM.

- Send search terms to a statistical text index model to match and rank hits using traditional NLP methods, such as Term Frequency-Inverse Document Frequency (TF-IDF).

- Having identified relevant source documents from the training dataset, we can re-rank individual content sections, trimming when necessary.

- Combining our user's query with additional context from the training dataset helps to ground the query and enable in-context learning.
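
The stages above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the Altinn Assistant's actual implementation: every function name (`extract_search_terms`, `tfidf_rank`, `build_prompt`) is hypothetical, the LLM call in the first stage is replaced by a simple stopword filter so the example runs offline, and the TF-IDF scoring is a bare-bones standard-library variant rather than a production text index.

```python
import math
import re
from collections import Counter

# Hypothetical stopword list, standing in for the LLM in stage 1.
STOPWORDS = {"how", "do", "i", "my", "a", "an", "the", "to", "in", "of", "and", "or", "by"}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def extract_search_terms(query):
    """Stage 1 placeholder: a real pipeline would ask an LLM for search terms."""
    return [t for t in tokenize(query) if t not in STOPWORDS]


def tfidf_rank(terms, docs):
    """Stages 2 and 3: score each document with TF-IDF and rank the hits.

    Returns (doc_index, score) pairs, best first; documents scoring 0 are dropped.
    """
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(docs)
    doc_freq = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))  # count each term once per document

    ranked = []
    for index, tokens in enumerate(tokenized):
        if not tokens:
            continue
        term_freq = Counter(tokens)
        score = 0.0
        for term in terms:
            tf = term_freq[term] / len(tokens)
            # Smoothed inverse document frequency, so rare terms weigh more.
            idf = math.log((1 + n_docs) / (1 + doc_freq[term])) + 1
            score += tf * idf
        if score > 0:
            ranked.append((index, score))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


def build_prompt(query, sections, max_chars=500):
    """Stage 4: combine the query with trimmed context sections."""
    context = "\n\n".join(section[:max_chars] for section in sections)
    return f"Context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "How to submit a tax return in the portal",
        "Contact support by phone or email",
        "Tax return deadlines and how to request an extension",
    ]
    query = "How do I submit my tax return?"
    hits = tfidf_rank(extract_search_terms(query), docs)
    print(build_prompt(query, [docs[i] for i, _ in hits[:2]]))
```

Keeping each stage as its own function means any one of them can be swapped out, for example replacing the stopword filter with a real LLM call, without touching the rest of the pipeline.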